Since I needed a JSON Schema validator implemented in Pascal, I decided to write one, naturally with a test-driven approach.

The JSON Schema organization maintains a language-agnostic test suite. It consists of JSON files that specify, for each rule a validator must check, the cases it must accept or reject.
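Each suite file is a JSON array of groups. Each group has a `description`, a `schema` and a `tests` array, and every test carries its own `description`, the `data` to validate and the expected `valid` result. The sketch below walks that structure and lists each test. It assumes Free Pascal's fcl-json units (`fpjson`, `jsonparser`) and uses an abridged, hand-written sample instead of a real suite file. `CountTests` is a hypothetical helper added only for illustration.

```pascal
program ListSuiteGroups;

{$mode objfpc}{$H+}

uses
  SysUtils, fpjson, jsonparser; // jsonparser provides the parser used by GetJSON

const
  // Abridged, hand-written sample in the suite layout:
  // an array of groups, each with a description, a schema and a list of tests
  SampleFile =
    '[{"description": "integer type matches integers",' +
    '  "schema": {"type": "integer"},' +
    '  "tests": [' +
    '    {"description": "an integer is an integer", "data": 1, "valid": true},' +
    '    {"description": "a string is not an integer", "data": "foo", "valid": false}' +
    '  ]}]';

// Hypothetical helper: total number of tests across all groups
function CountTests(Groups: TJSONArray): Integer;
var
  i: Integer;
begin
  Result := 0;
  for i := 0 to Groups.Count - 1 do
    Inc(Result, Groups.Objects[i].Arrays['tests'].Count);
end;

var
  Groups: TJSONArray;
  Group, Test: TJSONObject;
  i, j: Integer;
begin
  Groups := GetJSON(SampleFile) as TJSONArray;
  try
    for i := 0 to Groups.Count - 1 do
    begin
      Group := Groups.Objects[i];
      WriteLn(Group.Get('description', ''));
      // Each test: what to validate and whether it must be accepted
      for j := 0 to Group.Arrays['tests'].Count - 1 do
      begin
        Test := Group.Arrays['tests'].Objects[j];
        WriteLn('  ', Test.Get('description', ''), ' -> valid = ',
          BoolToStr(Test.Booleans['valid'], True));
      end;
    end;
    WriteLn('total tests: ', CountTests(Groups));
  finally
    Groups.Free;
  end;
end.
```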

I could write a program to convert the JSON specification into Pascal units containing the test cases, as I did with the Mustache spec, but that is far from an optimal approach: the test application would have to be regenerated each time the spec changes.

So I looked for a way to create the tests dynamically, reading the JSON files directly. A quick search led me to the solution of creating a custom TTestCase class with a published method (generically named Run) that implements the test. An instance of this class is created for each test, passing it the appropriate data.

While it works, this approach has a drawback: the generic method name clutters the test runner output with meaningless information. To work around this, it is possible to aggregate tests into one big test case, e.g., a single test case for the JSON Schema type rule, instead of one test case per test description.

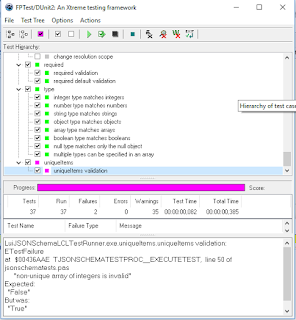

Confident that a better solution must exist, I dug into the FPTest source code (a Free Pascal port of DUnit2), looking for a way to get the best of both worlds: data-driven dynamic tests with the granularity of handcrafted tests.

Fortunately, I found a way. The key is to subclass TTestProc and instantiate it properly.

```pascal
type
  TJSONSchemaTestProc = class(TTestProc)
  private
    FData: TJSONObject; // one group of the suite: description, schema and tests
    procedure ExecuteTest(SchemaData, TestData: TJSONObject);
    procedure ExecuteTests;
  public
    constructor Create(Data: TJSONObject);
  end;

constructor TJSONSchemaTestProc.Create(Data: TJSONObject);
begin
  // Register the non-published ExecuteTests method, named after the group description
  inherited Create(@ExecuteTests, '', @ExecuteTests, Data.Get('description', 'jsonschema-test'));
  FData := Data;
end;
```

A TJSONObject with the test specification is passed to the constructor, and a non-published method (ExecuteTests) is registered using the test description as its name. The methods that actually run the tests are:

```pascal
procedure TJSONSchemaTestProc.ExecuteTest(SchemaData, TestData: TJSONObject);
var
  Description: String;
  ValidateResult: Boolean;
begin
  Description := TestData.Get('description', '');
  ValidateResult := ValidateJSON(TestData.Elements['data'], SchemaData);
  // The suite states whether the data must be accepted ("valid": true) or rejected
  if TestData.Booleans['valid'] then
    CheckTrue(ValidateResult, Description)
  else
    CheckFalse(ValidateResult, Description);
end;

procedure TJSONSchemaTestProc.ExecuteTests;
var
  SchemaData: TJSONObject;
  TestsData: TJSONArray;
  i: Integer;
begin
  SchemaData := FData.Objects['schema'];
  TestsData := FData.Arrays['tests'];
  // Run every test of the group against the same schema
  for i := 0 to TestsData.Count - 1 do
    ExecuteTest(SchemaData, TestsData.Objects[i]);
end;
```

ExecuteTests runs, one by one, the assertions of the group (called "tests" in the specification). With this I get a comprehensive test suite that effectively drives the development.
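Stripped of the FPTest plumbing, the per-group loop can be sketched as a plain console program. This is a minimal sketch under explicit assumptions: `ToyValidate` is a hypothetical stand-in for the real `ValidateJSON` that only understands the `type` keyword with `integer` or `string`, and the hypothetical `RunGroup` counts mismatches and prints the failing test's description where the FPTest version calls CheckTrue/CheckFalse. It relies only on fcl-json (`fpjson`, `jsonparser`).

```pascal
program RunGroupSketch;

{$mode objfpc}{$H+}

uses
  SysUtils, fpjson, jsonparser; // jsonparser provides the parser used by GetJSON

const
  SampleGroup =
    '{"description": "integer type matches integers",' +
    ' "schema": {"type": "integer"},' +
    ' "tests": [' +
    '   {"description": "an integer is an integer", "data": 1, "valid": true},' +
    '   {"description": "a string is not an integer", "data": "foo", "valid": false},' +
    '   {"description": "null is not an integer", "data": null, "valid": false}' +
    ' ]}';

// Hypothetical toy validator: only the "type" keyword with "integer" or "string"
function ToyValidate(Data: TJSONData; Schema: TJSONObject): Boolean;
var
  TypeName: String;
begin
  TypeName := Schema.Get('type', '');
  if TypeName = 'integer' then
    Result := (Data is TJSONIntegerNumber) or (Data is TJSONInt64Number)
  else if TypeName = 'string' then
    Result := Data.JSONType = jtString
  else
    Result := True; // any other schema accepts everything in this toy
end;

// Runs every test of one group against its schema and returns the number
// of mismatches, printing the description of each failing test
function RunGroup(Group: TJSONObject): Integer;
var
  Schema, Test: TJSONObject;
  Tests: TJSONArray;
  i: Integer;
begin
  Result := 0;
  Schema := Group.Objects['schema'];
  Tests := Group.Arrays['tests'];
  for i := 0 to Tests.Count - 1 do
  begin
    Test := Tests.Objects[i];
    if ToyValidate(Test.Elements['data'], Schema) <> Test.Booleans['valid'] then
    begin
      Inc(Result);
      WriteLn('FAIL: ', Test.Get('description', ''));
    end;
  end;
end;

var
  Group: TJSONObject;
begin
  Group := GetJSON(SampleGroup) as TJSONObject;
  try
    WriteLn('failures: ', RunGroup(Group));
  finally
    Group.Free;
  end;
end.
```

One difference to keep in mind: FPTest checks, as in DUnit, stop the method at the first failed assertion, whereas this sketch keeps going and counts every mismatch in the group.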

While it took me some time to understand the FPTest/DUnit2 source code, the solution ended up simpler and clearer than I initially thought, so much so that I foresee using this technique for testing other projects, not only third-party specifications.

BTW: the test runner source code can be found here